I Don’t Want AI to Replace My Mind. Here’s My Simple System + 17 Prompts

How we can stay thinkers in an age of machines that can think too

Is AI the end of thinking?

I recently saw a LinkedIn post about people who refuse to use AI chatbots because they fear the tools will make them dumber, and it's not the first time I've heard this sentiment. It's everywhere, from Reddit threads to quiet conversations with colleagues, even from my sixteen-year-old neighbor who's convinced that using ChatGPT will atrophy his brain.

And I share some of these fears too, even as I write about AI every week. Even as I use these tools daily. Even as I've built part of my work around helping others navigate this technological shift.

I hold two truths at once: I believe AI can amplify human thinking when used right, but I also catch myself reaching for easy answers instead of wrestling with hard questions. I've noticed the subtle pull toward intellectual passivity, the way my mind relaxes when I know AI can handle some of the heavy lifting.

But I know that refraining from using the technology is not the answer either.

While we debate whether AI will make us dumber, the world around us is already reorganizing itself around these tools. Our competitors are using them. Our students are using them. The problems we need to solve are becoming more complex, not less.

Choosing to abstain isn't principled resistance, it's choosing irrelevance. We'd be sidelining ourselves while the challenges that require our best thinking evolve beyond our unaided reach.

So maybe that's the wrong question to be asking…

The real question

The viral MIT study, the Reddit debates, the cultural anxiety, they all assume we're passive recipients of whatever AI does to us. That we'll inevitably become cognitively lazy because the technology makes laziness possible. That our minds will automatically atrophy from disuse.

But this framing misses one important element: choice.

The real question isn't "Is AI the end of thinking?" It's "Will we keep thinking once AI enters the room?"

This distinction matters because it shifts us from victims to agents. From asking whether AI is inherently good or bad to asking how we choose to engage with it. From worrying about inevitable outcomes to taking responsibility for intentional practices.

Every conversation with AI is a choice point. We can treat it as a shortcut to avoid the hard work of thinking, or as an instrument that makes our thinking more rigorous, more creative, more expansive than it would be alone.

Which is why this article is about how we can stay thinkers in an age of machines that can think too. We’ll explore:

How AI redistributes cognitive effort, altering where our attention and judgment are needed most.

A simple three-part framework for treating AI as a thinking partner rather than a shortcut.

17 short prompts drawn from classic critical thinking frameworks that make AI conversations sharper and more useful.

Why critical thinking feels different with AI

There's something much deeper happening that explains why AI feels so threatening to our sense of intellectual identity.

Throughout history, we've gradually outsourced cognitive abilities to our tools. We stopped memorizing epic poems when we had books. We stopped doing complex arithmetic in our heads when calculators became ubiquitous. We stopped remembering phone numbers when smartphones arrived.

Each time, we adapted. We found new ways to use our minds.

But AI feels different because it's not just handling one cognitive task, it's doing the kind of thinking and work we've always considered uniquely human.

We're witnessing our cognitive processes being replicated and, in many cases, exceeded by machines. The way we think, and what we think about, is changing in real time. No wonder it feels threatening.

But to understand exactly how this shift is happening, we need to look at what AI actually does to the cognitive work itself.

How AI redistributes cognitive effort

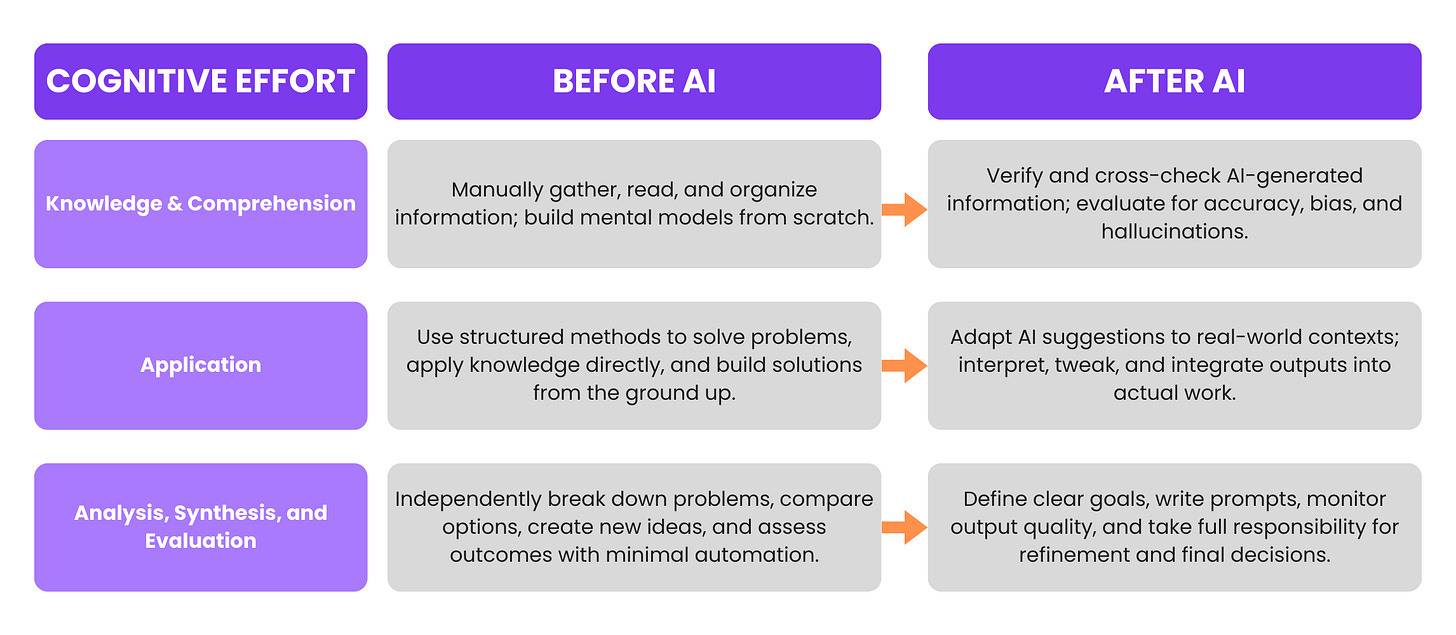

Microsoft research reveals exactly how this shift plays out across different levels of thinking:

Knowledge & Comprehension: Instead of spending time gathering information, we spend it verifying what AI gives us. The work moves from retrieval to validation (checking for hallucinations, catching errors, ensuring accuracy).

Application: The effort shifts from problem-solving to integration. AI can suggest solutions, but we have to adapt those suggestions to our specific context, ensure they fit real-world constraints, and sometimes discover that custom tailoring takes more work than starting from scratch.

Analysis & Evaluation: This becomes about stewardship. AI can scaffold complex analysis, but we have to get clear on what we want, translate our intentions into effective prompts, and keep steering the output toward our standards. Unlike working with a human partner, the responsibility doesn't get shared, it ultimately rests with us.

This redistribution creates something entirely new: a form of thinking that's neither purely human nor purely artificial, but emerges from the tension between our judgment and the machine's capabilities.

The question is whether we'll rise to meet these new cognitive demands or let the skills they require atrophy from neglect.

A simple (but not easy) framework for thinking critically when working with AI

To counter our brain's desire for easy answers, we need a system that forces us to slow down and engage our deliberate thinking (what Daniel Kahneman calls System 2).

Here is a simple three-part framework that covers what to do before, during, and after an AI conversation to ensure we're using it as a true thinking partner.

1. Before: We think first

The most important work happens before we even open the chat window. We need to take a moment to reflect on the challenge before typing it in: What do we already believe? What are we trying to solve? What outcome are we hoping for?

Defining this starting point is the first step in engaging our own mind, before AI steps in.

2. During: We ask better

How we ask matters: the way we phrase the question, the context we provide, and our awareness of the biases that might shape our prompt. All of this determines the quality of the response.

A lazy, leading question gets a lazy, agreeable answer, while a thoughtful, neutral question gets a more objective and useful one.

3. After: We challenge the answer

What we do next is key. The real thinking happens after our AI chatbot responds. We need to read the answer, judge it, challenge it, and build our follow-up questions from there.

We should never treat the first response as the final word, but as the starting point for a deeper conversation.

Is this system hard to implement? Yes. It slows us down. But it's the only way to ensure AI becomes the second brain everyone talks about, instead of a replacement for our first one.

The bridge between knowing and doing

Even smart people who are excellent critical thinkers in their regular work often abandon their usual rigor when talking to AI. Something about the interface makes us lazy. We skip the steps we’d naturally take with human colleagues, like slowing down to explain clearly and provide the context they’d need to help.

It's almost like we expect the model to read our minds, then get frustrated when it doesn't.

And since most of us don’t know or can’t remember prompt structures in the heat of real work, we need something simpler: an arsenal of short questions that don't take long to type but still force us to pause and think.

At the very least, this is the minimum we owe ourselves if we want to stay engaged rather than slip into autopilot.

17 short prompts for deeper thinking

Usually, I advocate for longer, more carefully crafted prompts because they’re excellent predictors of output quality, and the very process of crafting them brings clarity of mind. But starting small beats doing nothing, so these short questions offer a bridge between knowing better and doing better.

The prompts here are based on well-established thinking frameworks you might already know. They can help you catch problems before they become costly mistakes and turn AI from a risky gamble into a dependable thinking partner.

But remember: none of these replace the crucial first step of thinking through the problem yourself before you start typing. That step also forces you to decide what context is relevant - what background, constraints, or data the AI needs to give a useful answer.

1. 5 Whys: uncover the real root cause instead of fixing surface symptoms

This method helps you find the root cause of a problem. You start with the problem, then keep asking “why?” until you get past surface-level answers and uncover what’s really driving it. It keeps you from fixing symptoms instead of causes.

Prompt:

“Here’s the problem. Ask me ‘why’ until we find the root cause.”

2. Problem Restatement: see fresh angles by reframing the problem in new ways

The first way you describe a problem isn’t always the best. Restating it in different ways opens up new angles and can completely change how you solve it.

Prompt:

“Here’s the problem. Give me 3–5 alternative framings that shift perspective, not just wording.”

3. Inversion & Guardrails: spot failure modes early and build safeguards before they appear

Most plans don’t collapse because of bad luck; they fail because of blind spots we didn’t prepare for. Instead of only asking how to succeed, flip the question: how could this fail, and how can you protect against it? Looking at failure modes first helps you build guardrails before the problems show up.

Prompt:

“Here’s my goal. Show me the biggest ways this plan could fail, what would cause them, and the guardrails I can put in place now to avoid sabotaging it later.”

4. Socratic Questioning: sharpen your thinking by exposing hidden assumptions and weak logic

This is about digging deeper with questions instead of accepting things as given. You test assumptions by asking things like “What do you mean by that? What evidence supports it? What if the opposite were true?” It sharpens your ideas.

Prompt:

“Question me like Socrates about this idea until I hit the core principle or expose a contradiction.”

5. Outside View: stay realistic by looking at similar cases

We often get trapped in our own perspective. The outside view asks: how did similar projects turn out? This gives you a reality check from past patterns.

Prompt:

“Here’s my plan. Based on what usually happens in comparable cases, how likely is it to succeed or fail, and why?”

6. Devil’s Advocate: strengthen ideas by forcing them to withstand tough criticism

This forces your idea to stand up against criticism. By having the AI argue against it, you expose weaknesses you might ignore. If your idea survives, it’s stronger.

Prompt:

“Here’s my idea. Argue against it as strongly as possible.”

7. Red Team / Blue Team: stress-test plans with a structured debate of strengths vs. weaknesses

This is like a structured debate. One side defends your idea (blue team), the other side attacks it (red team). It shows you both strengths and weaknesses.

Prompt:

“Here’s my plan. Run a Red Team vs Blue Team debate with multiple arguments on both sides. Summarize where the defense is strongest, where the attack is strongest, and what that means for me.”

8. Unintended Consequences: anticipate ripple effects and side impacts before they catch you off guard

Every action creates ripple effects, many of them invisible at first. By asking where things could backfire or produce side effects, you avoid short-term wins that turn into long-term problems. This lens pushes you to look beyond the obvious outcome.

Prompt:

“Here’s my plan. What edge cases might I be missing now that could lead to unintended consequences later?”

9. Steelman: understand the other side fully by building its strongest possible case

Instead of making the other side look weak, you make the strongest possible version of the opposing view. This helps you understand it better and strengthens your own position.

Prompt:

“Here’s my position. Build the strongest possible version of the opposing view, as if its smartest defender wrote it.”

10. Triangulation: cross-check claims with multiple perspectives for a clearer picture

No single source is perfect. By looking at the problem from multiple perspectives (data, expert opinion, case studies), you get a clearer and more reliable picture.

Prompt:

“Here’s my claim. Test it from three independent angles: 1) what the data suggests, 2) what experts or research say, and 3) what past real-world cases show. Where do they align, and where do they conflict?”

11. Barbell Strategy: balance safety and upside by testing extremes in parallel

Borrowed from investing, the barbell strategy means exploring extremes instead of just the middle. One path is ultra-conservative, another is high-risk/high-reward. Running both in parallel can balance safety and upside.

Prompt:

“Show me one ultra-conservative way to do this, and one high-risk/high-reward way. How could I run both in parallel?”

12. Multiple Models: see the problem more fully by layering different mental lenses

No single lens explains everything. Looking at a problem through different models (e.g. economics, psychology, systems thinking) gives you a fuller picture.

Prompt:

“Here’s the situation. Apply multiple mental models to it (psychology, economics, strategy). Compare how each would frame the problem.”

13. Falsification: replace wishful thinking with ideas that survive real evidence tests

Most ideas sound good until you ask the harder question: what evidence would prove this wrong? Falsifiability forces you (and AI) to stop defending an idea and start testing it.

Prompt:

“Here’s my claim/idea. Check current evidence with sources that could prove it wrong.”

14. Pros/Cons/Alternatives (PCA): avoid tunnel vision by weighing trade-offs and alternatives together

Pros and cons alone can be limiting. By forcing yourself to add at least one alternative option, you make better comparisons and avoid tunnel vision.

Prompt:

“Here’s the answer. Give me pros, cons, and one alternative.”

15. Reframing Setbacks: turn obstacles into opportunities and keep momentum moving forward

How we interpret setbacks shapes how we respond. Reframing in the moment is difficult, but AI can help us step back, question limiting beliefs, and see opportunities we might otherwise miss.

Prompt:

“Here’s the setback I’m facing. Help me reframe it as an opportunity. What lessons, strengths, or advantages could come from it?”

16. Hanlon’s Razor: save mental energy by looking for non-malicious explanations first

Not everything goes wrong because someone’s out to get you. More often, mistakes, miscommunication, or chance are to blame. By deliberately asking for non-malicious explanations, you stop yourself from jumping to the worst conclusion in work, relationships, or your own analysis.

Prompt:

“Here’s what happened. Give me 3 non-malicious explanations for it.”

17. Incentives: reveal what truly drives behavior by mapping hidden motivators

Using this lens helps you see why deals stall, why systems keep producing the “wrong” outcome, or what’s really driving behavior in news and politics, opening your eyes to dynamics you might otherwise miss.

Prompt:

“Map out the hidden incentives here. Who benefits, who loses, and how does that shape behavior?”

Which of these prompts would you actually use in your daily work? Share your favorites in the comments.

Human + AI: A choice, not a fate

So, is AI the end of thinking? I’d say no. But it is a fundamental redistribution of how we think.

The risks of passive engagement are real. When we consistently choose the path of least resistance, when we accept first answers without verification, when we let AI do our thinking for us, we do risk losing the very cognitive muscles that make us effective thinkers.

But when we bring our full cognitive capacity to AI collaboration — when we think first, ask better, and challenge the answers — we create something more powerful than either human or artificial intelligence alone. We develop a form of augmented thinking that preserves what’s essentially human about reasoning while leveraging what’s uniquely powerful about computation.

The prompts I’ve shared here aren’t only about getting better outputs from AI. They’re about transforming our relationship with these tools entirely. Instead of being task delegators who simply ask AI to do things for us, these questions help us pause and engage more deeply. They surface insights and perspectives we wouldn’t discover on our own, turning AI interaction into intellectual partnership.

But this requires treating AI as a thinking partner, not a thinking replacement. It means accepting the additional cognitive burden of stewardship. It means choosing the harder path of engagement over the easier path of delegation.

The technology itself is neutral. What matters is how we choose to dance with it, whether we lead or follow, whether we remain intellectually curious or become cognitively passive.

The solution isn’t to avoid AI, it’s to engage with it more thoughtfully.

Your next move

Pick your top 5 favorites from this list, the ones that feel most relevant to your work. Memorize them or save them somewhere easily accessible. Then start applying them in every AI conversation.
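If you're comfortable with a little code, one way to keep your favorites "easily accessible" is a tiny prompt library. The sketch below is only an illustration, not part of the system itself: the names `FAVORITE_PROMPTS` and `build_prompt` are hypothetical, the five prompts are lightly adapted from the list above so your own description can be slotted in, and the empty-input check is my assumption about how to nudge yourself to think first.

```python
# A minimal sketch of a personal prompt library (all names are hypothetical).
# Each template is adapted from the article's short prompts, with a {context}
# slot for the problem statement you write yourself.
FAVORITE_PROMPTS = {
    "5_whys": "Here's the problem: {context} Ask me 'why' until we find the root cause.",
    "devils_advocate": "Here's my idea: {context} Argue against it as strongly as possible.",
    "steelman": (
        "Here's my position: {context} Build the strongest possible version "
        "of the opposing view, as if its smartest defender wrote it."
    ),
    "pros_cons_alternatives": "Here's the answer: {context} Give me pros, cons, and one alternative.",
    "hanlons_razor": "Here's what happened: {context} Give me 3 non-malicious explanations for it.",
}


def build_prompt(name: str, context: str) -> str:
    """Return the chosen prompt with your own problem statement filled in."""
    context = context.strip()
    if not context:
        # Assumption: refusing an empty description enforces "we think first".
        raise ValueError("Think first: describe the problem in your own words.")
    if name not in FAVORITE_PROMPTS:
        raise KeyError(f"Unknown prompt {name!r}; choose from {sorted(FAVORITE_PROMPTS)}")
    return FAVORITE_PROMPTS[name].format(context=context)


if __name__ == "__main__":
    # Example usage: paste the printed text into whichever chatbot you use.
    print(build_prompt("devils_advocate", "We should move the launch to May."))
```

A plain note in your phone works just as well. The point is only that the prompt is one step away when you need it, and that you still have to supply the context yourself.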

Notice how they change not just what AI gives you, but how you think about the problems you're trying to solve.

Every interaction with AI is a vote for the kind of mind you want to become. That choice is ours to make, one conversation at a time.

How I can help you beyond this newsletter

I also work one-on-one with a few people to help them integrate AI into their workflow so they can save time, reduce friction, and scale what matters most. If that’s what you’re after, just hit reply.

Till next time,

Daria
