I Built a Tool to Prove the ‘Prompts Aren’t Valuable’ Myth Wrong

Struggling with generic AI outputs? Try my Prompt Generator to see how good prompts change everything. Free credits for premium readers.

In my first months of using AI, I used to blame every weak output on the model. I would type something in, get a generic answer, and close the tab too quickly, thinking, "Alright, it's just not capable of this."

Then the problem became impossible to ignore when I started building AI products with my friend Matei. Every day I would sit there with prompts that just didn't work. They refused to deliver what I wanted, no matter how many times I rephrased them.

But then I would see what other people were building. Their prompts actually worked, and their outputs were just… incredible.

There was something deeply frustrating about watching someone else use the same tools to get great results while I was still struggling with the basics. Same models, same interfaces, completely different results.

That was the moment it clicked for me. The problem wasn't the machine. It was me.

I wasn't clear enough. I didn't know how to guide it properly. My prompting skills were weak, and I couldn't translate the clarity I had in my head into instructions the machine could understand. I couldn't bridge the gap between my thinking and the output I was aiming for.

That realization sent me down a long rabbit hole of studying prompts. I started breaking down the work of the best practitioners word by word. Testing them, tweaking one piece at a time, watching how the output shifted, then building my own and running the same experiments again.

Over time, I stopped seeing prompts as simple inputs and started seeing them as a way of pausing just long enough to get things right. It's slower at the start, but far quicker than reworking draft after draft that never quite lands where you need it to.

Most people are still exactly where I used to be

I notice this every day now. In conversations with people around me. In Reddit threads where people complain AI "sucks". In posts where outputs are shared as proof the tools don't deliver.

Underneath those complaints lies the same problem I once had: those prompts are half-formed thoughts with no structure behind them. They're missing the techniques that actually make AI work: context, clear direction, and the craft of turning your thinking into something the machine can follow.

The consequences are visible everywhere. Hours lost rewriting bad drafts. Generic answers passed off as insight. People blaming the model and walking away while others, with the same tools, are producing strategies worth paying for, workflows worth running, writing worth publishing.

The gap, most of the time, isn't in the technology. It's in how well we have learned to work with it.

But learning those techniques takes time. The same months of studying, testing, and refining that I went through. Most people don't have that luxury. They need results now, not after a long apprenticeship in prompt engineering.

So I started thinking: what if I could take everything I learned from testing and refining hundreds of prompts and build something that does that work for you, so you can achieve that same level of quality?

The solution: a Prompt Generator that turns any rough idea into a reliable prompt

That’s what led me to create the Prompt Generator. Instead of expecting people to repeat the same cycle of trial and error I went through, I wanted to capture everything I’d learned in a way that makes prompting skills accessible from the start and helps you get results without the wasted time.

P.S. Free subscribers get 30 credits to test it out. Premium readers will get a separate email with a code that unlocks 150 extra credits.

Here's how it works

Expands any rough idea into a full, detailed prompt (over 1,000 words), built with the right structures and prompting techniques so it’s reusable and consistently delivers high-quality results.

Builds a step-by-step plan directly into the prompt, so when you run it through your LLM, the model reasons through the task instead of rushing to surface-level answers. That way the final output is more thorough and closer to how an expert would approach it.

Draws on credible knowledge (best practices, limitations, and real-world methods) and embeds it into the prompt. This anchors the AI to proven guidelines, almost like recursive prompting, but without you having to build up that knowledge step by step.

Guides you through adding context, the missing step behind most bad outputs, so AI doesn’t default to assumptions and your results are tailored and specific.

Self-checks for accuracy and completeness, flagging hallucinations and comparing the output against credible guidelines for how that task is done well. Each prompt is held to a high standard before you see the results.

The result is prompts that don’t just generate answers but set a higher bar for the work itself.

And that’s why it always surprises me when I hear people say prompts aren’t valuable, that you can achieve the same outputs without them. But instead of debating it, let me show you the difference side by side.

Bad prompts vs. good prompts

To show you the difference in output between a bad prompt and a good one, I ran a simple test.

I started with the kind of lazy prompt most people type into their AI chatbot, the one-liner command you throw in and hope for the best. I pasted it in to see what it produced.

Then I took that same lazy prompt, ran it through the Prompt Generator, and used the mega-prompt it created. I filled in the context it asked for so the results would be specific to my situation.

Let's see how they performed on a few examples:

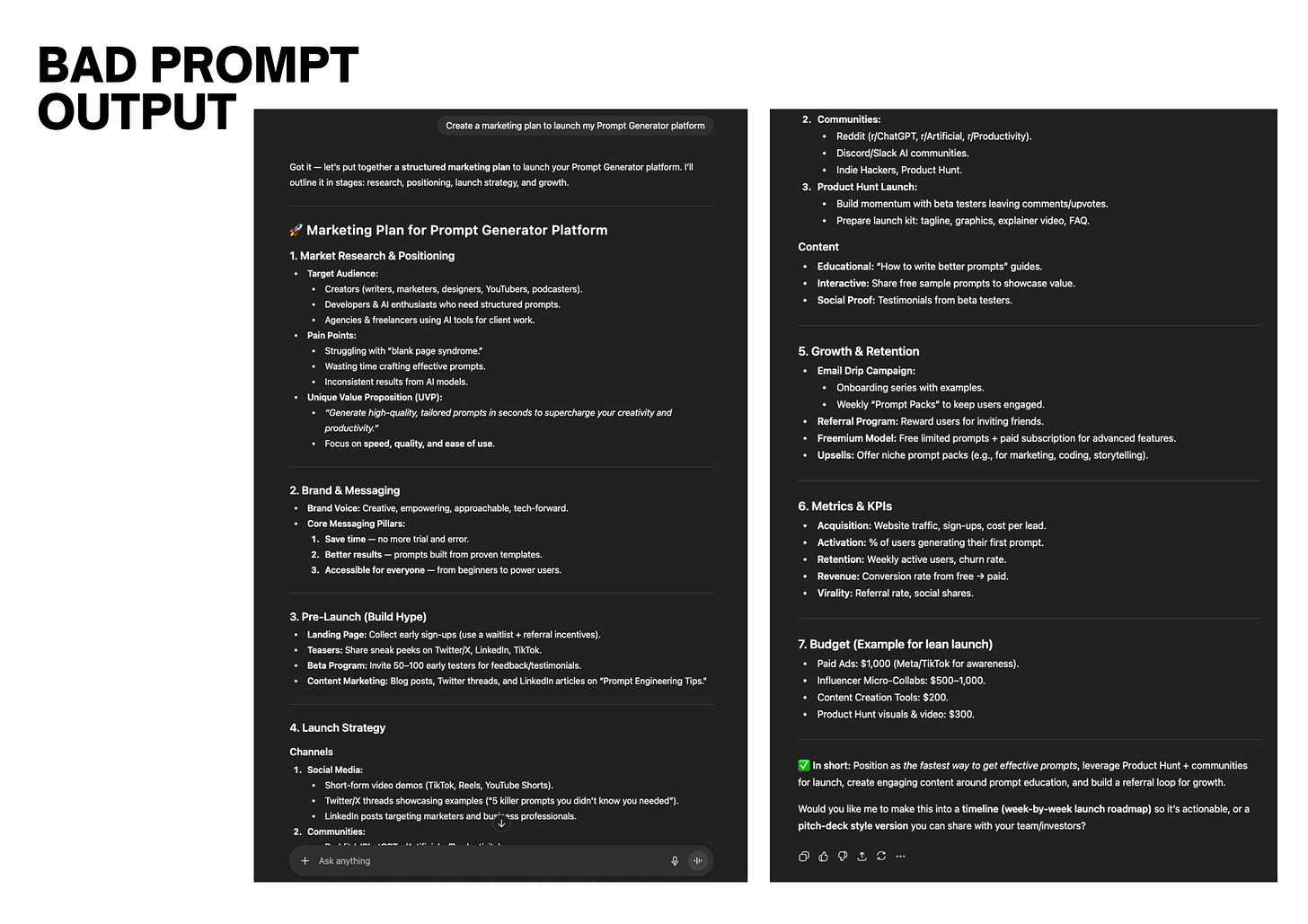

Example 1: Creating a Marketing Plan

Typical lazy approach: "Create a marketing plan to launch my Prompt Generator platform" gave me a generic playbook you'd find on any business blog.

Good prompt approach: Produced a 19-page marketing plan with specific budgets, KPIs, timelines, and risk assessments (the kind of document companies pay thousands for), while still leaving placeholders for the data I needed to fill in.

Example 2: Creating Notes for Substack

Typical lazy approach: "Help me create Substack notes about AI" produced short, generic posts that didn’t match Substack’s format or my own writing style.

Good prompt approach: Produced content in my actual voice with compelling hooks, focused on the kinds of topics I usually write about rather than AI news. These are notes I’d actually publish.

The difference in output speaks for itself

Looking at these side-by-side comparisons, it becomes clear: the myth that “prompts aren’t valuable” just doesn’t match what happens in practice.

A good prompt isn’t just a one-liner. It pulls together three things from the start:

Prompting techniques - how you effectively communicate with the machine and guide its process.

Expertise and constraints - frameworks, examples, or mental models that give the AI something concrete to work with.

Context that matters - the specific details that prevent generic outputs and make the result actually applicable to your situation.

That’s exactly why I built the Prompt Generator the way I did. It gives you prompts that already have structure and expertise built in. All you do is add your context, and you’re starting from a much stronger place.


When a chat is enough vs. when you need a prompt

Not every AI interaction calls for a carefully built prompt.

Sometimes it makes more sense to have a conversation with the model, especially when you’re still exploring and haven’t made up your mind yet. Other times, when the work has to meet a higher bar, a strong prompt gives you a far better starting point.

Here’s how I see the difference:

→ When conversation works best:

You’re exploring ideas and don’t yet know what you want

You’re clarifying your own thinking through back-and-forth

The task is simple (summaries, small edits, brainstorming lists)

The stakes are low and speed matters more than polish

→ When a structured prompt is essential:

The task is recurring and you need consistent quality each time

The work will be judged by others (clients, colleagues, readers)

You’re outside your expertise and need reliable guidance

The problem is complex and requires depth, not surface answers

That’s what this tool is designed for: those moments where the difference between “good enough” and “good work” really matters.

Why this matters more than you think

The gap between good and bad prompting isn't just about getting better outputs - it's about changing your relationship with AI. When you learn to communicate effectively with these systems, several things happen:

Quality markers get defined. Instead of vague requests, you learn to specify exactly what professional-quality work looks like in your context.

Context becomes clear. You stop expecting AI to guess what you want and start providing the background information it needs to deliver relevant results.

Standards rise. You develop the ability to evaluate AI's work with nuance, knowing when it's aligned with best practices and when it falls short.

That’s the progression that shifts AI from hit-or-miss outputs to something you can rely on in your work.

Because your success with AI won't be determined by access to the latest model or the most advanced features. It will be determined by your ability to translate what you need into language that gets results.

The tools are ready. Your ideas are waiting. All that's left is bridging the gap between them.

Access the Prompt Generator

If you're a free subscriber, you get 30 credits when you create your account so you can test it out.

If you’re a paid subscriber, I’ve set up a code that gives you 150 extra credits. Keep an eye on your inbox today for the email.

Try it out and let me know how the Prompt Generator works for you, what you think of the outputs, and the results you’re getting. If you have any questions, just reply to this email or DM me.

Till next time,

Daria
