I can’t believe I’m writing this today… and actually sharing the launch of the AI Blew My Mind podcast.

A podcast that’s fully AI-voiced.

Can you believe this is even possible?!

I still can’t.

A few weeks ago, I read

’s post on NotebookLM. Ever since, I’ve been using the tool to upload my own resources and listen to them instead of reading. The sound quality is unreal and it’s super engaging. You honestly can’t even tell it’s AI-powered and not human.

And then today, while playing around with it, this idea hit me:

What if I added podcasts to my own written posts?

We’re at this incredible point in time where so much is possible with AI, and I just feel so lucky to be living through it.

So today’s episode (and this post) dives into a question that’s fascinated me:

Can AI really be creative?

In this post, you’ll explore:

Why AI often feels repetitive and what to do about it

The exact prompts and frameworks I use to spark better ideas

Surprising research that reveals the best way to boost AI creativity and diversity of ideas

The #1 prompting technique (backed by data) that changes everything

Facing the limits of AI creativity

We all know AI can brainstorm fast.

But it often gives us a bunch of ideas that feel… the same.

I remember when I was creating stories for my own startup’s Instagram page. Each day I’d write a motivational quote and post it as a story. Once ChatGPT went mainstream, I naturally started using it to find quotes instead of researching them manually.

Every time I told it, “Give me 100 quotes on [topic],” it would repeat the ideas.

Then I’d add, “Do not repeat the quotes under any circumstances.” It would still repeat them.

Back then, I didn’t know much about prompting, but I remember it being quite a struggle.

Out of a whole thread with hundreds of quotes, I’d only end up choosing the equivalent of one month’s worth that were actually good.

And that whole struggle with quotes wasn’t a one-off.

I’ve run into the same problem in lots of different contexts. Whether it was product ideas, marketing angles, or workshop exercises, it always felt like GPT would eventually start circling back to the same familiar territory.

No matter how big the list was, after a while, everything started to blur together.

How I discovered smarter prompting

Then I started discovering all these new voices in the AI space sharing their own prompts, and when I tried them out, I was blown away by the results.

That’s when I actually got curious about why these prompts worked so well, and it pushed me to dive even deeper into the world of prompt engineering.

Along the way, I gathered so many useful lessons that I put them into a dedicated post for anyone curious to dig deeper:

ChatGPT Not Working? Here’s the Prompting Fix I Use

Whether you’re using AI to write, brainstorm, plan, or build, this post breaks down how to actually get the results you want. I’ll walk you through simple but powerful prompting techniques, plus real examples you can copy and adapt.

Frameworks that made GPT more creative

One of the biggest things I learned is that you don’t need to reinvent the wheel to get GPT to be more creative.

Sometimes the magic happens when you introduce frameworks we already know into your prompts.

Even though GPT is already “trained” on these frameworks, explicitly guiding it to use them, combined with smart prompting techniques, made a huge difference in the variety and creativity of the ideas it produced.

Here’s a sneak peek into some of the frameworks I use to help GPT to be more creative:

Design Thinking – Empathy Stage:

Identify 5 different types of users for [product/service]. For each user type, describe their main goals, frustrations, and unmet needs in 2–3 sentences.

SCAMPER Framework (for idea improvement):

Use the SCAMPER method to improve [product/idea]. Go through each step: Substitute, Combine, Adapt, Modify, Put to another use, Eliminate, Reverse, and list at least 3 ideas per step.

Jobs To Be Done Framework:

List 10 specific ‘jobs’ that [target audience] is trying to get done in relation to [topic]. For each job, brainstorm 2 innovative solutions or product ideas that could help them accomplish it better.

First Principles Thinking:

Break down [problem] into its core components by asking: What is it made of? Why does it exist? What assumptions are we making? Then suggest 3 creative ways to solve it by rethinking from the ground up.

What/Why/How Model:

First explain what the main challenge is for [topic]. Next, explain why this challenge matters to users or customers. Finally, brainstorm 5 new ways to solve the challenge, making sure each solution is clear, actionable, and different from common approaches.

Figure Storming:

Take on the persona of [famous figure: e.g., Elon Musk, Oprah, Albert Einstein]. How would they approach [your challenge]? List 5 creative ideas that reflect their thinking style.

Role Storming:

Imagine you are [specific user: e.g., a frustrated customer, a new employee]. Act as if you are experiencing [problem]. What ideas would you propose to solve it? List at least 5 practical suggestions from that user’s perspective.

Reverse Brainstorming:

Instead of asking how to solve [problem], ask: How could we cause [problem] or make it worse? List 5 ways to create the issue, then reverse-engineer each one into a solution that prevents it.

Reverse Thinking:

Ask: What would [competitor/someone outside your industry] do in this situation? List 3 approaches they might take. Then evaluate: Would their strategy work better or worse than your current plan, and why?

Adding these kinds of structured prompts gave GPT a clearer direction, and the results were noticeably more creative, useful, and diverse.
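If you reuse these prompts a lot, the bracketed placeholders can be filled in with a few lines of code instead of by hand. Here’s a minimal sketch in Python. The `FRAMEWORK_PROMPTS` dictionary and the `fill_prompt` helper are names I made up for illustration, and only two of the frameworks are included to keep it short:

```python
import re

# Two of the framework prompts from this post, stored as reusable templates.
# The dictionary keys are hypothetical labels chosen for this example.
FRAMEWORK_PROMPTS = {
    "scamper": (
        "Use the SCAMPER method to improve [product/idea]. Go through each step: "
        "Substitute, Combine, Adapt, Modify, Put to another use, Eliminate, Reverse, "
        "and list at least 3 ideas per step."
    ),
    "reverse_brainstorming": (
        "Instead of asking how to solve [problem], ask: How could we cause [problem] "
        "or make it worse? List 5 ways to create the issue, then reverse-engineer "
        "each one into a solution that prevents it."
    ),
}


def fill_prompt(template, values):
    """Replace every [placeholder] in the template with its value.

    Raises KeyError if a placeholder has no matching entry in `values`,
    so a half-filled prompt never gets sent by accident.
    """
    def substitute(match):
        key = match.group(1)
        if key not in values:
            raise KeyError(f"No value supplied for placeholder [{key}]")
        return values[key]

    return re.sub(r"\[([^\]]+)\]", substitute, template)


prompt = fill_prompt(
    FRAMEWORK_PROMPTS["scamper"],
    {"product/idea": "a reusable coffee cup"},
)
print(prompt)
```

The same placeholder can appear more than once (like [problem] in the reverse brainstorming prompt), and every occurrence gets the same value.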

Have a favorite framework of your own or tried one of these prompts? I’d love to hear how it worked for you. Drop your thoughts in the comments below!

What the research reveals about AI creativity

Up until now, everything I shared was based on my own trial and error, just figuring things out as I went.

But recently, I discovered a research paper that took things to a whole new level:

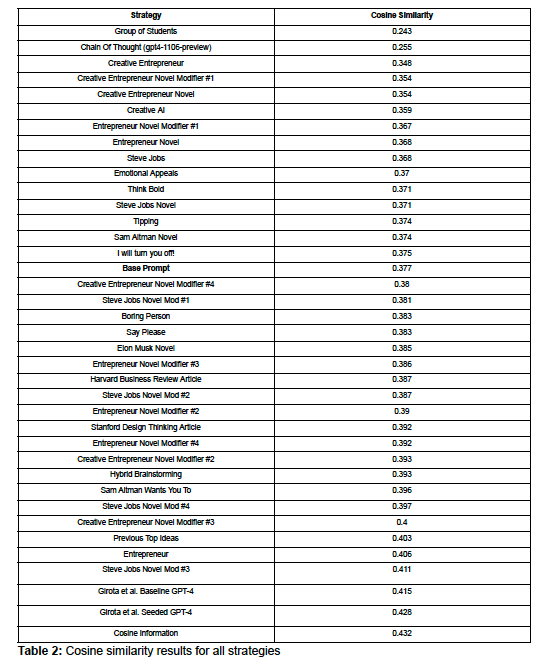

It tested 35 different prompting strategies to uncover what actually helps GPT-4 generate more diverse and original ideas.

What stood out to me is how much their findings echoed my own experience, but with real data and testing behind it.

Inside the results: prompting that changes everything

The study showed that while GPT-4 is great at generating ideas fast, it tends to fall into familiar patterns, producing ideas that are less varied than those from human brainstorming sessions.

They measured idea diversity using cosine similarity (a score for how alike two ideas are, where a higher score means more similar) and found that while human brainstorming produced highly varied ideas, GPT-4’s ideas tended to be noticeably more repetitive.
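To make cosine similarity less abstract, here’s a small Python sketch that scores a pool of ideas by averaging the similarity of every pair. One big assumption: a real setup would turn each idea into a vector with a text-embedding model, while this example uses plain word counts as a stand-in so it runs with no extra libraries. The function names and sample ideas are made up for illustration and are not from the paper.

```python
import math
from collections import Counter
from itertools import combinations


def bag_of_words(text):
    """Turn an idea into a word-count vector (a stand-in for a real embedding)."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine of the angle between two count vectors: 0 = no overlap, 1 = same direction."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def average_pairwise_similarity(ideas):
    """Mean cosine similarity over every pair of ideas; lower means a more diverse pool."""
    vectors = [bag_of_words(idea) for idea in ideas]
    pairs = list(combinations(vectors, 2))
    if not pairs:
        return 0.0
    return sum(cosine_similarity(a, b) for a, b in pairs) / len(pairs)


repetitive = [
    "a smart water bottle for students",
    "a smart water bottle for athletes",
    "a smart water bottle for commuters",
]
varied = [
    "a smart water bottle for students",
    "foldable desk lamp with built-in charger",
    "subscription laundry pickup service on campus",
]

print(round(average_pairwise_similarity(repetitive), 2))
print(round(average_pairwise_similarity(varied), 2))
```

The first pool scores about 0.83 because the three ideas share five of their six words, while the second scores 0.0 because no words overlap at all. Word counts only catch shared wording, so two ideas that mean the same thing in different words would still look diverse here. That’s the gap an embedding model is meant to close.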

So yes, if you’ve felt that AI can get repetitive just like I did, you’re not imagining it.

But the good news is, the researchers identified what actually helps you prompt AI to get more creative and diverse ideas.

They tried 35 strategies to shake things up, including:

Telling GPT-4 to “think like Steve Jobs”

Using design thinking principles

Incorporating Harvard Business Review brainstorming methods

Assigning roles or personas (like thinking as a futurist or innovator)

Using step-by-step breakdowns (hello, Chain-of-Thought!)

Prompting GPT-4 to argue against its own ideas to unlock new angles

Each strategy significantly influenced how creative and varied the AI’s outputs were.

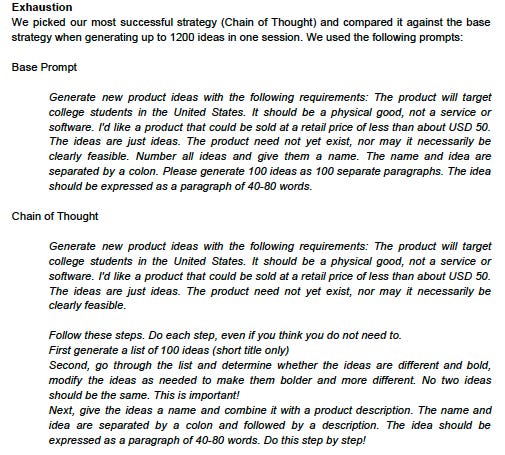

The #1 strategy for unlocking diverse, original ideas

This table shows the cosine similarity scores for each prompting strategy the researchers tested. In simple terms, lower scores mean the ideas were more diverse and creative, while higher scores indicate more repetitive, similar ideas.

The human brainstorming group appears at the top as a benchmark for true diversity, and you can see that Chain-of-Thought (CoT) prompting comes closest to matching that human-level diversity, which shows how effective this strategy can be.

Instead of asking GPT-4 to brainstorm ideas all at once, CoT breaks the task into smaller, logical steps, essentially guiding the AI to think out loud before delivering final ideas. This added structure pushes the AI to explore deeper angles and consider different pathways, leading to much more varied and original results.
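Here’s roughly what that step-by-step structure can look like when you build the prompt in code. This is a sketch in Python under my own assumptions: the step wording is mine, not the exact prompt from the paper, and `build_cot_prompt` is a made-up helper name.

```python
def build_cot_prompt(task, idea_count=10):
    """Build a step-by-step brainstorming prompt instead of a single one-shot request."""
    # Illustrative steps only; adjust the wording to fit your own task.
    steps = [
        f"Write a list of {idea_count} short idea titles for: {task}.",
        "Go through the list and check whether any ideas overlap. "
        "Rewrite the overlapping ones so every idea is clearly different.",
        "For each idea on the revised list, add a one-sentence description.",
    ]
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return "Follow these steps in order and show your work for each one.\n" + numbered


print(build_cot_prompt("a new product for college students", idea_count=20))
```

The resulting text is what you’d paste into ChatGPT (or send through an API) in place of a plain “give me 20 ideas” request. The middle step is the important one, because it asks the model to review and revise its own list before finalizing it.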

In the study, CoT significantly expanded the pool of unique ideas and helped the AI avoid falling into repetitive patterns too quickly.

What’s even more interesting is that the researchers didn’t stop there. They tested hybrid approaches, combining CoT with other strategies like assigning a creative persona.

These combos amplified creativity even more, showing that layering techniques can push AI brainstorming far beyond what a single strategy can achieve.
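Layering is easy to experiment with if you treat each technique as an optional piece of the prompt. A minimal Python sketch, assuming a persona line plus a list of steps is a fair stand-in for the hybrid approach (the `layer_prompt` helper and the example persona and steps are hypothetical, not taken from the paper):

```python
def layer_prompt(task, persona=None, steps=None):
    """Combine an optional persona and optional step-by-step instructions into one prompt."""
    parts = []
    if persona:
        # Persona layer: tells the model whose thinking style to adopt.
        parts.append(f"You are {persona}.")
    parts.append(f"Task: {task}")
    if steps:
        # Step-by-step layer: numbered instructions the model works through in order.
        parts.append("Work through these steps in order:")
        parts.extend(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return "\n".join(parts)


hybrid = layer_prompt(
    "Brainstorm marketing angles for a reusable coffee cup.",
    persona="a futurist who studies everyday habits",
    steps=[
        "List 10 short angles.",
        "Replace any angles that sound alike with clearly different ones.",
        "Describe each remaining angle in one sentence.",
    ],
)
print(hybrid)
```

Because both layers are optional, you can run the same task with the persona only, the steps only, or both, and compare the results side by side.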

Why this changes the game

The clear takeaway from all of this is that prompting isn’t just a detail, it’s everything.

It shows that how you ask is just as important as what you ask for. A tiny tweak to your prompt can be the difference between AI spitting out the same old stuff… or generating something fresh, useful, and actually innovative.

And this matters for anyone creating with AI or using it as a brainstorming partner, whether you’re writing content, developing products, solving business challenges, or just exploring new ideas. The more clearly you guide the AI, the more original, useful, and varied the results will be.

Prefer to listen?

This post’s podcast episode is fully based on this research paper. So if you want to hear all these insights in audio form, hit play at the top of this post and check it out!

And if you’re the kind of person who loves to dig into the original source material, here’s the full paper once again: Prompting Diverse Ideas: Increasing AI Idea Variance.
